Chapter 3: Git for the Agentic Age

AgentSpek - A Beginner's Companion to the AI Frontier


“We can only consider a limited number of things at once. So the question is: how do we factor our programs to take the complexity that’s not going away and write it in such a way that when we look at any given part of it, we can understand it?” - Rich Hickey, “Simple Made Easy” (2011)

The Commit That Changed Everything

    commit 7f8a9b2c1e4d6f9a2b5a8c1f4d7a0b3c6e9f2a5c
    Author: j@joshuaayson.com
    Co-authored-by: Claude <noreply@anthropic.com>
    Date: Tue Oct 15 14:32:18 2024 -0700

        Add content processing pipeline with graph database integration

        Claude designed the Neo4j relationship schema and implemented
        the core ETL logic. I refined the error handling and optimized
        for our specific content structure.

        Generated with Claude Code

This commit message tells a story that would have been impossible five years ago.

It’s not just code. It’s a record of human-AI collaboration that produced something neither could have created alone.

Look closer and you’ll see something else: this isn’t just good software engineering practice adapted for AI. It’s an entirely new way of thinking about version control, attribution, and the evolution of ideas.

Git was built for human-paced development, emerging from Linus Torvalds’ frustration with existing version control systems in 2005. “I’m an egotistical bastard, and I name all my projects after myself,” he famously quipped. “First Linux, now git.” But beneath the humor was serious intent: to create a distributed system that could handle the Linux kernel’s development pace. AI changes all of these assumptions. When your pair programming partner can generate a thousand lines of tested code in thirty seconds, when experimentation costs approach zero, when you can explore dozens of architectural approaches in the time it used to take to implement one, your version control strategy needs to evolve.

This chapter isn’t about adding AI features to your existing Git workflow. It’s about reimagining version control for an age where the boundary between human and machine contributions isn’t just blurred, it’s irrelevant. What matters is building software that works, with a historical record that tells the truth about how it came to be.

Rethinking the Commit: Semantic History in the AI Era

The fundamental unit of Git is the commit, but what should a commit represent when AI can generate substantial changes in seconds?

Classic Git philosophy emphasizes commits as logical units of change. One feature, one commit. Atomic changes that can be safely reverted. Clear, descriptive messages that explain the “why.” This echoes what Alan Perlis, the first Turing Award winner, advocated in 1958: “Every program should be a structured presentation of an algorithm.” Version control extended this principle to the evolution of programs. But the classic model assumes human development speeds and human cognitive limits: a typical feature takes hours or days to implement, so each commit represents a meaningful chunk of developer time and thought.

With AI assistance, the relationship between time and logical units breaks down completely.

Consider this: what used to take from 10 AM to 5 PM now happens between 10:00 and 10:25.

You describe the feature to AI. Review the generated implementation. Refine edge case handling. Verify tests and documentation. Done.

The same logical unit of work happens more than fifteen times faster: roughly seven hours compressed into twenty-five minutes.

Should this be one commit or five? The answer depends on what story you want your Git history to tell.

Instead of time-based commits, what if we organized around semantic intentions?

Think about intent commits that capture the human decision-making process. Your commit message serves as a record of architectural decisions: what authentication approach to use, how long sessions should last, which features to build now versus later, where to store sensitive data. You note that AI suggested technical implementation patterns and security best practices while human decisions focused on user experience and business priorities.

Then there are implementation commits that capture the technical execution. Token handling, secure credential storage, session management, input validation, error handling and logging. All co-authored, all collaborative, all honest about how the code came to be.

And refinement commits capture the iterative improvement process. You add rate limiting to login endpoints, improve error messages for better UX, handle edge cases in the password reset flow, add comprehensive test coverage. The message records that human review identified security gaps in the initial AI implementation. The collaborative refinement process continues.

This approach preserves both the human creative process and the technical implementation details while maintaining clear attribution. It tells the truth about how modern software actually gets built.
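One way to make the three kinds of commit concrete is to encode them in the subject line. The sketch below is only an illustration: the `intent`, `impl`, and `refine` prefixes and the `SemanticCommit` class are hypothetical names invented here, not an established convention. The `Co-authored-by` trailer, by contrast, is the same line that appears in the commit at the start of this chapter.

```python
from dataclasses import dataclass
from enum import Enum


class CommitKind(Enum):
    # Hypothetical subject-line prefixes for the three kinds of commit.
    INTENT = "intent"
    IMPLEMENTATION = "impl"
    REFINEMENT = "refine"


@dataclass
class SemanticCommit:
    kind: CommitKind
    summary: str
    body: str = ""
    co_authors: tuple[str, ...] = ()

    def message(self) -> str:
        """Render subject, optional body, then trailers as the last paragraph."""
        parts = [f"{self.kind.value}: {self.summary}"]
        if self.body:
            parts.append(self.body.strip())
        if self.co_authors:
            # One trailer per line, grouped in the final paragraph.
            parts.append("\n".join(f"Co-authored-by: {a}" for a in self.co_authors))
        return "\n\n".join(parts) + "\n"


commit = SemanticCommit(
    kind=CommitKind.REFINEMENT,
    summary="Add rate limiting to login endpoints",
    body="Human review identified security gaps in the initial AI implementation.",
    co_authors=("Claude <noreply@anthropic.com>",),
)
print(commit.message())
```

Keeping the kind in the subject line means a plain one-line log view already shows which commits record decisions and which record execution.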

Branch Strategies for AI Experiments

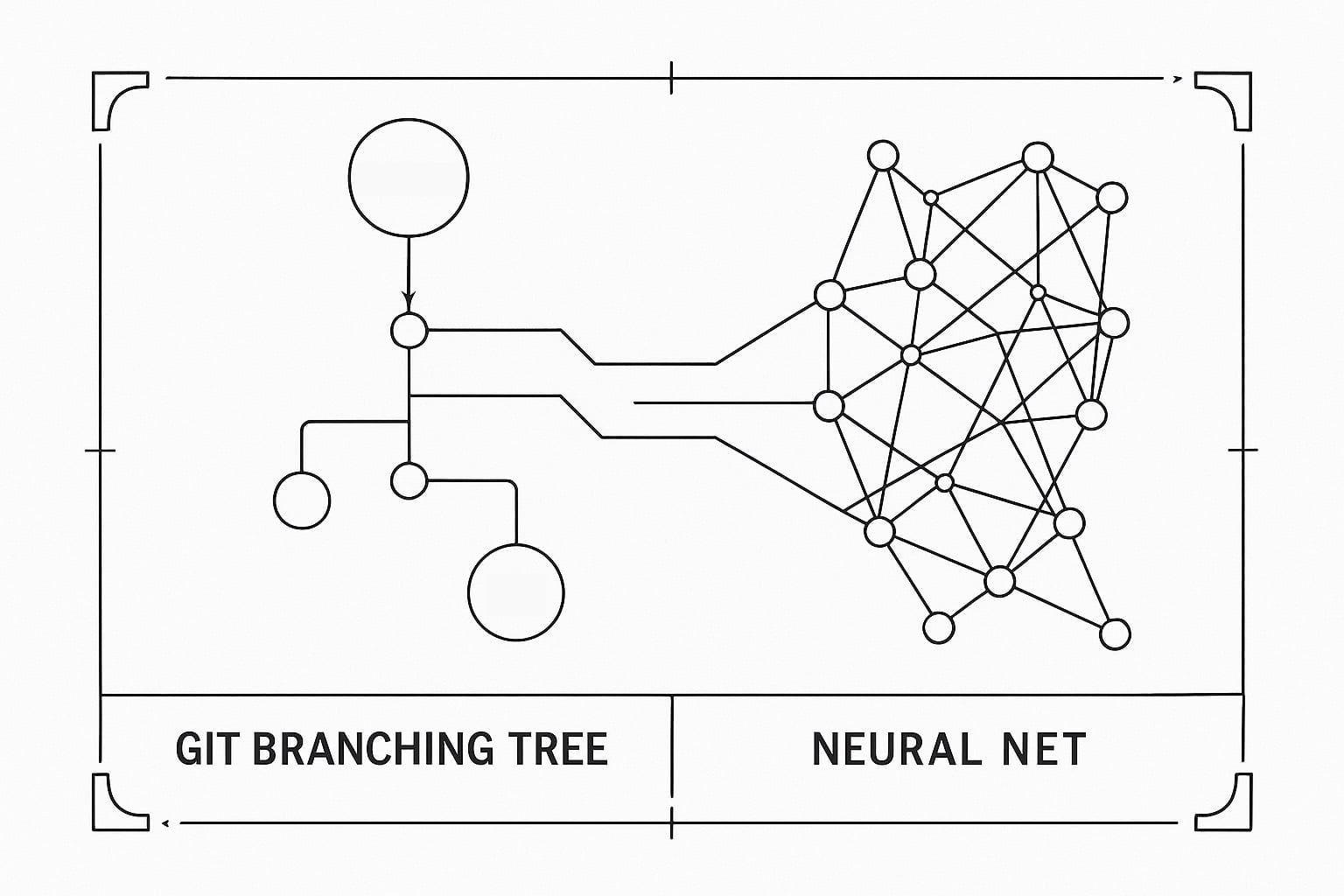

Traditional branching strategies like Git Flow and GitHub Flow assume that branches represent significant development effort and that merging represents risk. These strategies evolved from earlier models; as early as the 1968 NATO Software Engineering Conference, participants were already discussing the “software crisis” and the need for systematic approaches to managing code changes. AI collaboration undermines both assumptions: the effort behind a branch approaches zero, and an experiment that fails costs little to discard.

When experimentation grows cheap, the number of viable approaches to any problem increases dramatically. Consider implementing a data processing pipeline. The traditional approach moves linearly: research, design, implement, test, refine. The AI-augmented approach? Parallel exploration of multiple implementations.

You might explore different architectural approaches simultaneously. Create a branch for streaming, another for batch processing, a hybrid approach, maybe even a serverless variant. Each can be fully implemented and tested in an hour or two. Instead of choosing upfront, you can try them all. This pattern, parallel exploration with rapid implementation, requires new branching strategies.

Think of branches as hypotheses, not commitments. Create an experimental branch with clear naming, implement with AI assistance, evaluate the approach. Keep the learnings, not necessarily the code. Document your findings. Maybe a passkey implementation revealed UX and compatibility issues: not suitable for your current user base, but promising for future consideration. The branch remains for future reference but isn’t merged. That’s valuable too.
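“Clear naming” and “document your findings” can be as lightweight as a naming helper and a small record type. The sketch below assumes an `experiment/<topic>/<approach>` naming scheme; that scheme, and the `ExperimentResult` class, are illustrative choices made here rather than anything Git requires.

```python
import re
from dataclasses import dataclass


def experiment_branch(topic: str, approach: str) -> str:
    """Build a branch name such as 'experiment/auth/passkeys'."""
    def slug(text: str) -> str:
        # Lowercase, and collapse anything that is not a letter or digit to '-'.
        return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")

    return f"experiment/{slug(topic)}/{slug(approach)}"


@dataclass
class ExperimentResult:
    branch: str
    merged: bool
    learnings: str

    def notes(self) -> str:
        """One-line summary suitable for a findings log."""
        status = "merged" if self.merged else "kept for reference, not merged"
        return f"{self.branch} ({status}): {self.learnings}"


result = ExperimentResult(
    branch=experiment_branch("Auth", "Passkeys"),
    merged=False,
    learnings="UX and compatibility issues; revisit for a future user base.",
)
print(result.notes())
```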

When exploring multiple approaches, you often discover that the best solution combines insights from several experiments. After exploring multiple pipeline approaches, you might cherry-pick the streaming core from one experiment, the batch processor from another, the integration tests from a third. AI helps integrate the disparate pieces while human judgment selects which pieces to combine. The final implementation might not come from any single experimental branch, but rather from the synthesis of multiple explorations.
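A synthesis like this is easier to review when the plan is written down before anything is cherry-picked. The sketch below only builds the Git commands and a provenance note; it does not run them. The branch names and abbreviated hashes are placeholders, and `Pick`, `synthesis_commands`, and `provenance_notes` are hypothetical helpers. The `-x` option asks `git cherry-pick` to record the original commit hash in the new commit message.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pick:
    source_branch: str  # experimental branch the commit came from
    sha: str            # commit to cherry-pick (placeholder values below)
    reason: str         # the human judgment behind the selection


def synthesis_commands(target: str, base: str, picks: list[Pick]) -> list[list[str]]:
    """Commands to create the synthesis branch and apply each pick in order."""
    commands = [["git", "switch", "-c", target, base]]
    commands += [["git", "cherry-pick", "-x", p.sha] for p in picks]
    return commands


def provenance_notes(picks: list[Pick]) -> str:
    """Text for the commit body or pull request describing where pieces came from."""
    return "\n".join(f"- {p.sha} from {p.source_branch}: {p.reason}" for p in picks)


picks = [
    Pick("experiment/pipeline/streaming", "a1b2c3d", "streaming core"),
    Pick("experiment/pipeline/batch", "e4f5a6b", "batch processor"),
    Pick("experiment/pipeline/hybrid", "c7d8e9f", "integration tests"),
]
for command in synthesis_commands("pipeline/synthesis", "main", picks):
    print(" ".join(command))
print(provenance_notes(picks))
```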

Consider maintaining a dedicated branch for AI collaboration artifacts, a living record of the decision-making process. Document prompts that led to breakthroughs, capture architectural decisions and their rationale, preserve failed experiments and lessons learned. This branch transforms into a knowledge repository, not just code. It’s where learning lives.

The New Commit Message: Attribution in the Age of Collaboration

Who wrote this code? The question used to have a simple answer. Now it’s complicated, and our commit messages need to reflect that complexity.

Traditional attribution was straightforward. You wrote it, you commit it, your name is on it. But what about code that emerges from conversation with AI? What about implementations you directed but didn’t type? What about refinements suggested by AI but selected by humans?

We need new conventions that tell the truth. When AI generates the initial implementation, say so. When human review catches critical issues, document that. When the final solution emerges from multiple rounds of human-AI collaboration, the commit message should reflect that reality.

Consider this format for collaborative commits. Start with the what: the standard description of changes. Follow with the how, documenting the collaboration process: AI provided the initial algorithm design, a human adjusted it for performance constraints, AI suggested optimization strategies, and the human selected among them based on maintainability. Finally, add the why, the human decisions and context: this approach was chosen for better user experience, readability was prioritized over micro-optimizations, and the design leaves room for future extensibility.

This level of detail might seem excessive, but it serves multiple purposes. It helps future developers understand not just what changed but how the solution was discovered. It provides learning opportunities for teams adopting AI collaboration. It maintains honest attribution in a world where “authorship” is increasingly complex. And it creates a historical record of how human-AI collaboration evolves.
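The what, how, and why structure is simple enough to generate consistently. The following is a minimal sketch, assuming plain `How:` and `Why:` section labels; those labels and the `collaborative_message` function are inventions for illustration, not a Git feature.

```python
def collaborative_message(
    what: str,
    how: list[str],
    why: list[str],
    co_authors: list[str],
) -> str:
    """Format a commit message as: what (subject), how, why, then trailers."""
    lines = [what, "", "How:"]
    lines += [f"- {step}" for step in how]
    lines += ["", "Why:"]
    lines += [f"- {reason}" for reason in why]
    if co_authors:
        # Trailers form the final paragraph of the message.
        lines.append("")
        lines += [f"Co-authored-by: {author}" for author in co_authors]
    return "\n".join(lines) + "\n"


print(
    collaborative_message(
        what="Add content processing pipeline",
        how=[
            "AI provided initial algorithm design",
            "Human adjusted for performance constraints",
        ],
        why=["Prioritized readability over micro-optimizations"],
        co_authors=["Claude <noreply@anthropic.com>"],
    )
)
```

Because the sections are plain text, the message stays readable in any Git client, and a team can adopt or drop the labels without changing tooling.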

Continuous Integration in the Age of Continuous Generation

CI/CD pipelines were built for a world where code generation was the bottleneck. Now that AI can generate code faster than pipelines can test it, we need new strategies.

The traditional pipeline assumes sequential validation: commit, build, test, deploy. Each stage gates the next. This made sense when commits were precious and generation was slow. But when you can generate ten valid implementations in the time it takes to run your test suite once, the pipeline turns into the bottleneck.

What if we inverted the relationship? Instead of testing after generation, what if we tested during exploration? AI generates implementation with embedded tests, runs lightweight validation during generation, provides confidence scores for generated code, and identifies areas needing human review. The pipeline transforms into a collaborator, not a gatekeeper.

Think about parallel validation strategies. While one implementation runs through comprehensive tests, AI generates alternatives. While integration tests run, AI explores edge cases. While performance benchmarks execute, AI optimizes critical paths. The pipeline and generation process interleave, each informing the other.
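As a toy illustration of validating several candidates at once, the sketch below runs the same small specification against two hypothetical implementations in a thread pool. Everything in it is a stand-in: the candidate functions play the role of AI-generated alternatives, and `CASES` plays the role of a real test suite, which would normally run in separate processes or CI jobs.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Candidate = Callable[[list[int]], list[int]]


def dedupe_with_set(items: list[int]) -> list[int]:
    # Candidate A: removes duplicates but loses the original order.
    return sorted(set(items))


def dedupe_preserving_order(items: list[int]) -> list[int]:
    # Candidate B: removes duplicates and keeps first-seen order.
    return list(dict.fromkeys(items))


# The specification: input paired with expected output.
CASES = [([3, 1, 3, 2, 1], [3, 1, 2]), ([], []), ([5, 5, 5], [5])]


def validate(candidate: Candidate) -> bool:
    """Return True when the candidate satisfies every case."""
    return all(candidate(list(given)) == expected for given, expected in CASES)


def validate_all(candidates: dict[str, Candidate]) -> dict[str, bool]:
    """Validate every candidate concurrently and collect pass/fail by name."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(validate, fn) for name, fn in candidates.items()}
        return {name: future.result() for name, future in futures.items()}


results = validate_all(
    {"set-based": dedupe_with_set, "order-preserving": dedupe_preserving_order}
)
print(results)
```

Here the tests act as the specification: the set-based candidate fails because the cases require first-seen order, which is exactly the kind of requirement a prose description tends to leave out.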

This requires new tooling, but more importantly, it requires new thinking. Tests aren’t just validation, they’re specification. Pipelines aren’t just automation, they’re feedback loops. Deployment isn’t just release, it’s experimentation.

The Social Dynamics of AI Commits

How do teams react when half the commits are co-authored by AI? How do you review code you didn’t write and your colleague didn’t entirely write either? These aren’t just technical questions, they’re social ones.

Some developers feel threatened when AI-generated code performs better than their handcrafted solutions. Others feel liberated from tedious implementation work. Some worry about attribution and credit. Others embrace the collaborative model. These tensions are real and need addressing.

Code review changes fundamentally when AI is involved. You’re not just reviewing code, you’re reviewing decisions. Why did you accept this AI suggestion but reject that one? What assumptions did the AI make that might not hold? What edge cases might both human and AI have missed? The review shifts toward judgment over syntax.

Teams need new norms around AI collaboration. When is it appropriate to use AI generation? How much AI involvement requires disclosure? Who’s responsible when AI-generated code has bugs? How do we maintain code ownership and pride in craftsmanship? These aren’t questions with universal answers, but every team needs to address them.

Trust dynamics shift when AI enters the picture. Can you trust code you didn’t write? Can you trust code your colleague didn’t fully write? How do you build confidence in AI-assisted development? The answer isn’t blind trust or constant suspicion, but thoughtful validation and gradual confidence building.

Practical Patterns for Today

While we’re philosophizing about the future of version control, you still have code to ship today. Here are patterns that work right now.

The Exploration Sprint pattern dedicates time explicitly to AI-assisted exploration. Create a two-hour block, generate multiple implementations, document learnings, select the best approach or synthesis. This legitimizes experimentation and prevents endless exploration.

The Human Touch Points pattern identifies moments where human judgment is essential. Architecture decisions, user experience choices, security review, performance trade-offs. AI assists everywhere, but humans make the calls that matter.

The Living Documentation pattern treats documentation as continuous, not eventual. AI generates initial docs with the code, humans refine for clarity and context, documentation evolves with implementation, and updates happen in the same commits as code changes.

The Honest History pattern commits to truthful attribution. Document AI involvement clearly, credit human decisions explicitly, preserve the collaborative nature of development, and maintain records for learning and improvement.
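An honest history can also be measured. The sketch below extracts `Co-authored-by` trailers from commit messages and reports what share of commits carry one. It assumes the messages have already been collected as strings (for instance from `git log --format=%B`, split per commit); the function names are hypothetical.

```python
import re

# Matches a Co-authored-by trailer on its own line, capturing the author.
TRAILER = re.compile(
    r"^Co-authored-by:[ \t]*(.+?)[ \t]*$", re.IGNORECASE | re.MULTILINE
)


def co_authors(message: str) -> list[str]:
    """Return every co-author named in a commit message's trailers."""
    return TRAILER.findall(message)


def co_authored_share(messages: list[str]) -> float:
    """Fraction of commits that name at least one co-author."""
    if not messages:
        return 0.0
    return sum(1 for m in messages if co_authors(m)) / len(messages)


history = [
    "Add content processing pipeline\n\nCo-authored-by: Claude <noreply@anthropic.com>\n",
    "Fix typo in README\n",
]
print(co_authors(history[0]))
print(co_authored_share(history))
```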

Where This Goes

Git was revolutionary because it distributed version control. Every developer has the full history. Every clone is complete. This democratized development in ways we’re still discovering.

AI collaboration might be similarly revolutionary. Every developer has an intelligent partner. Every problem has multiple solutions. Every experiment is affordable. This democratizes capability in ways we’re just beginning to explore.

But it also raises questions. If everyone can generate code, what distinguishes developers? If AI can implement anything, what’s worth implementing? If experimentation is free, how do we decide when to stop exploring? These aren’t problems to solve but tensions to navigate.

The future of version control isn’t just about managing code, it’s about managing the collaboration between human creativity and machine capability. It’s about preserving the story of how software comes to be in an age where that story is increasingly complex.

Your next commit will be part of this evolution. How will you tell its story? What truth will you preserve? What future will you build?

The tools are ready. The patterns are emerging. The only question is: what will you create with them?

And isn’t that always the question, in the end?

Sources and Further Reading

The chapter’s opening quote from Rich Hickey’s “Simple Made Easy” (Strange Loop, 2011) about managing complexity is particularly relevant to version control in AI development. His insight that we can only consider a limited number of things at once drives the need for clear git workflows.

The historical perspective draws on Alan Perlis’s epigrams on programming (1982) and insights from the NATO Software Engineering Conference (1968), where the “software crisis” and the need for systematic approaches to managing code changes were discussed.

For those interested in the evolution of version control, the story of Git’s creation by Linus Torvalds (2005) provides context for understanding how distributed version control revolutionized software development, setting the stage for today’s AI collaboration patterns.

The ethical considerations around attribution echo themes from Weizenbaum’s “Computer Power and Human Reason” (1976), particularly his concerns about transparency in human-machine collaboration.


© 2025 Joshua Ayson. All rights reserved. Published by Organic Arts LLC.

This chapter is part of AgentSpek: A Beginner’s Companion to the AI Frontier. All content is protected by copyright. Unauthorized reproduction or distribution is prohibited.