Chapter 13: The Value Equation

There's a moment when you realize you're not paying for tools anymore. You're paying for time. More specifically, you're paying to buy yourself back.

Working with AI doesn't just teach you new things. It reveals how much of what you 'know' is shallow, contextual, or simply wrong. And it happens at a pace that's psychologically disorienting.

When you have unlimited patience from your AI teammate, you grow more patient with your human teammates. When you can iterate rapidly on ideas with AI assistance, you become less precious about any particular approach with humans.

There's a moment when you realize you're not just using AI anymore. You're conducting an orchestra of intelligences, each with its own voice, its own strengths, its own way of seeing the world.

The code was AI-generated. Beautifully elegant. It had sailed through review and passed every test. And it hid a subtle concurrency flaw that only surfaced in production, when two processes collided. The quality paradox: code can be technically polished and still fail in ways you never imagined.

There's a moment when time stops making sense. When the normal relationship between effort and output breaks down completely.

There's something profound about waking up to work that was done while you slept. Not just done, but done with a thoroughness that makes you question your own approach to problem-solving.

There's a moment when you realize you're no longer programming computers. You're programming intelligence itself. Not through code but through clear expression of intent. Not through syntax but through structured thought.

There's a particular kind of clarity that emerges from conversation. Not the false clarity of a quick answer or a copied solution, but the deep understanding that comes from having your assumptions questioned, your blind spots illuminated, your half-formed thoughts given shape.

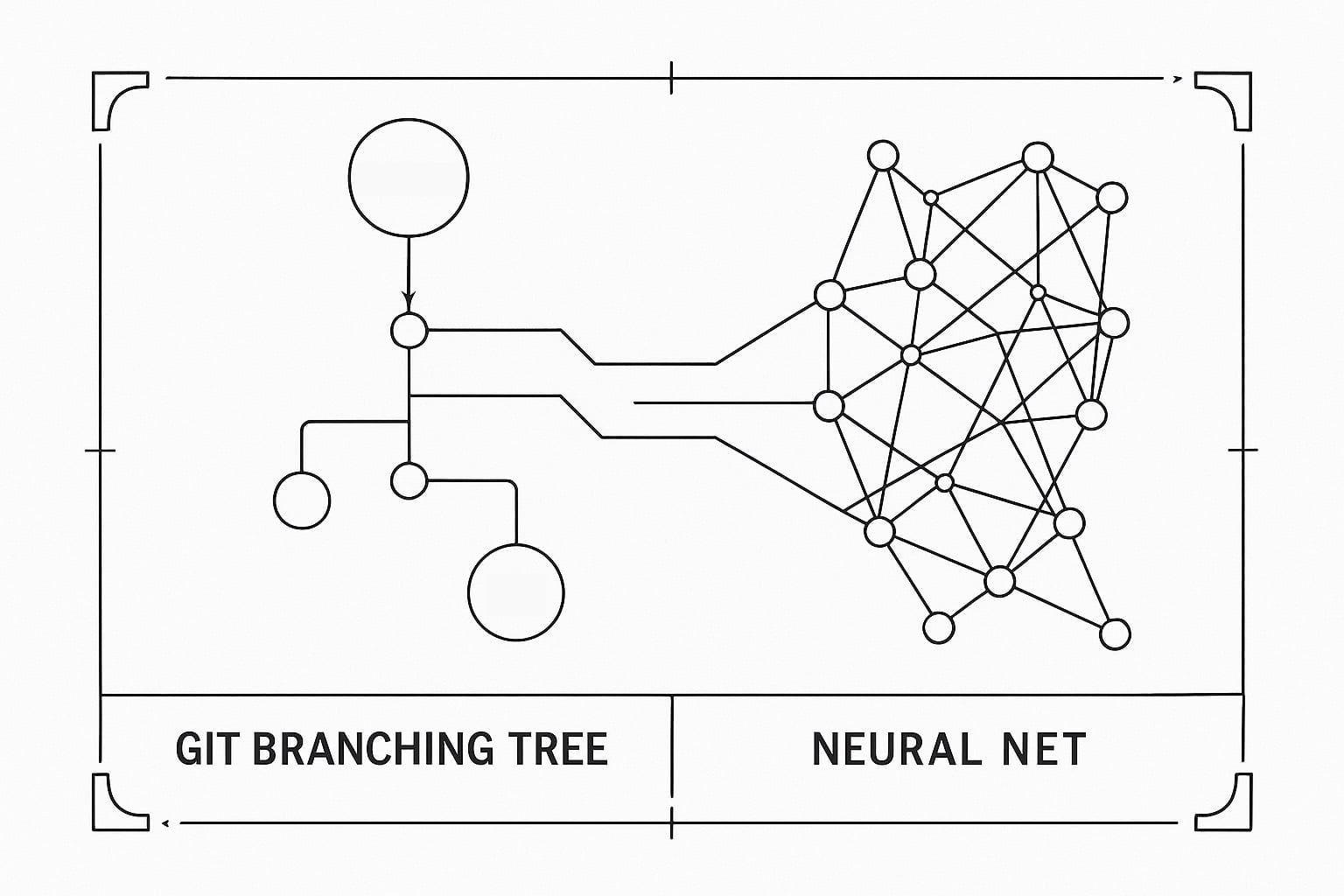

I don't think in fragments. I don't think in lines. I think in systems. In architectures. In complete solutions to complete problems. So I stopped fighting autocomplete and found what actually works: agent mode.

This commit message tells a story that would have been impossible five years ago: a record of human-AI collaboration that produced something neither could have created alone. Git was built around the assumptions of human-paced development, and AI changes all of them.

How do you measure the ROI of a tool that makes your team think better? This isn't about faster compilers or better CI/CD pipelines: it's about cognitive amplification that has strange, non-linear returns.

I opened seventeen browser tabs, each one a different AI coding tool promising to revolutionize development. This wasn't about money: it was about the overwhelming paradox of choice in a landscape that changes daily.